The Australian Medical Council is an organisation whose work has an impact across the lands of Australia and New Zealand.

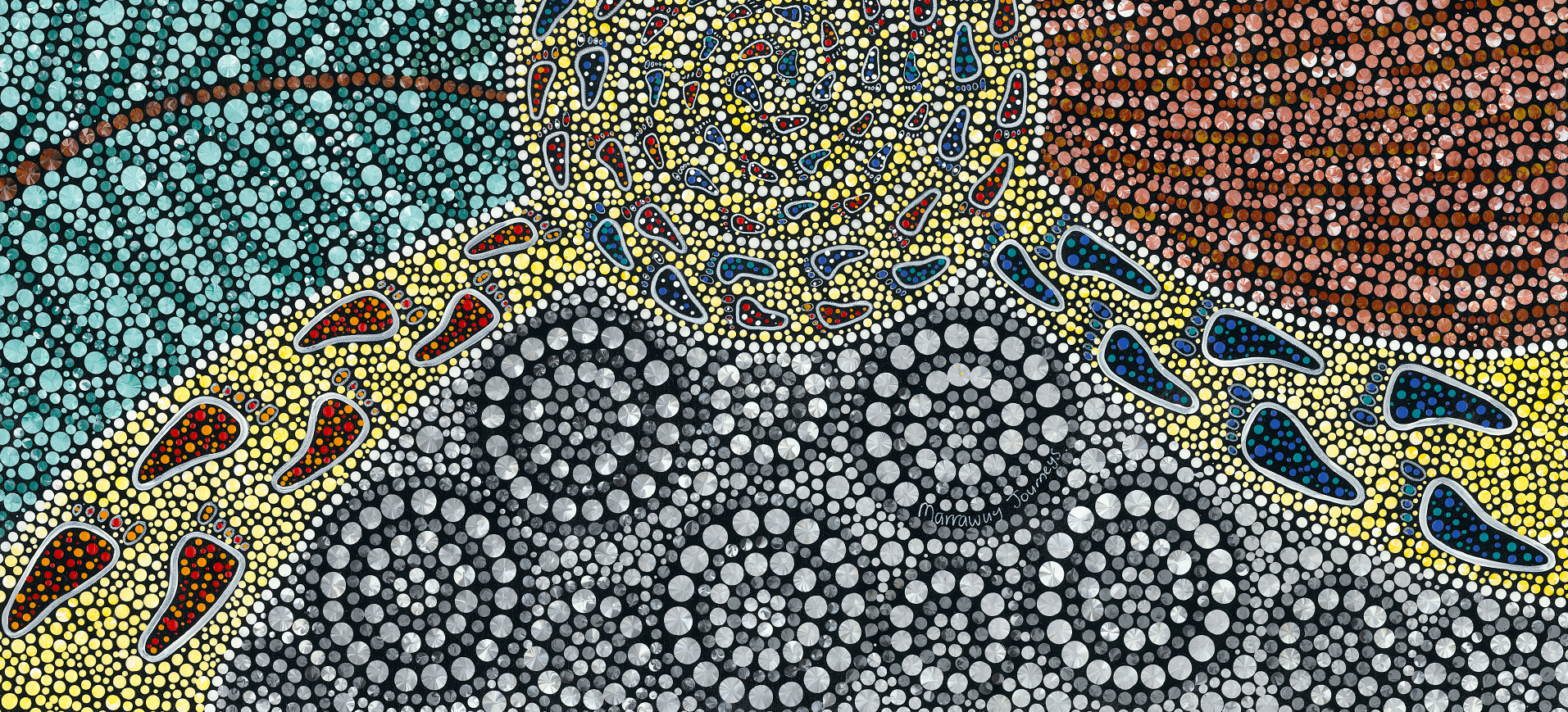

The Australian Medical Council acknowledges the Aboriginal and/or Torres Strait Islander Peoples as the original Australians and the Māori People as the tangata whenua (Indigenous) Peoples of Aotearoa (New Zealand). We recognise them as the traditional custodians of knowledge for these lands.

We pay our respects to them and to their Elders, past, present and emerging. We recognise and honour their enduring connection to the lands on which we live and work, and to the waters and sky of those lands.

Aboriginal and/or Torres Strait Islander people should be aware that this website may contain images, voices and names of people who have passed away.